Programmer and attorney Matthew Butterick has filed a lawsuit against Microsoft, GitHub, and OpenAI. He argues that GitHub Copilot infringes on programmers' rights and breaches the terms of open-source licenses.

GitHub Copilot, an AI-based programming tool, became generally available in June 2022. It uses OpenAI Codex to suggest source code and entire functions in real time inside editors such as Visual Studio.

The tool can turn natural-language prompts into code snippets in dozens of programming languages, and it was trained with machine learning on billions of lines of code from public repositories.

Clipping authors out

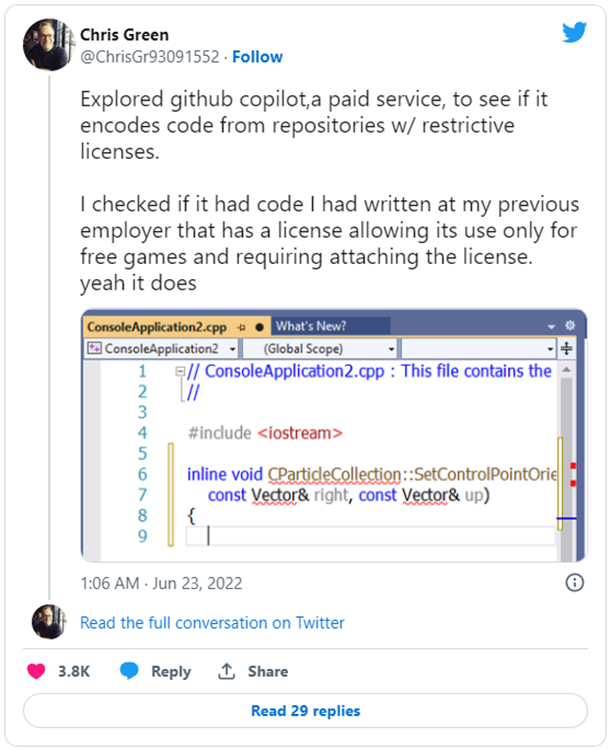

While Copilot can speed up coding and make software development easier, experts are concerned that, because it was trained on publicly available open-source code, it may not comply with those licenses' attribution requirements and restrictions.

The GPL, Apache, and MIT licenses, like many other open-source licenses, require attribution to the original author and preservation of the copyright notice.

GitHub Copilot drops this attribution entirely, even when it reproduces snippets longer than 150 characters verbatim from its training set.

Concerns about the legal implications of this approach surfaced soon after the tool's release, and some programmers have gone so far as to call it "open-source laundering."

According to the Joseph Saveri Law Firm, which represents Butterick in the litigation, "It appears Microsoft is benefitting from others' efforts by violating the terms of the underlying open-source licenses and other legal requirements."

To make matters worse, there have been reports of Copilot leaking secrets such as API keys that developers had mistakenly published in public repositories, which were then included in the training set.

The Breaches

Beyond the license violations, Butterick claims that the tool also breaches:

- GitHub's terms of service and privacy policies,

- DMCA Section 1202, which prohibits the removal of copyright-management information,

- the California Consumer Privacy Act,

- and other laws giving rise to related legal claims.

The complaint was filed in the U.S. District Court for the Northern District of California and seeks $9,000,000,000 in statutory damages.

According to the complaint, “GitHub Copilot violates Section 1202 three times each time it distributes an unlawful Output (distributing the Licensed Materials without (1) acknowledgment, (2) copyright notice, and (3) License Terms)”.

"Therefore, assuming each user only encounters one Output that violates Section 1202 over the course of their use of Copilot (up to fifteen months for the earliest adopters), GitHub and OpenAI have violated the DMCA 3,600,000 times. At minimum statutory damages of $2,500 per violation, that translates to $9,000,000,000."
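The damages figure follows from simple multiplication. The user count below is not stated in the quoted passage; it is back-calculated here from the 3,600,000 total and the three violations per Output, under the complaint's assumption of one infringing Output per user:

$$
\underbrace{1{,}200{,}000}_{\text{users (implied)}} \times \underbrace{3}_{\text{violations per Output}} = 3{,}600{,}000 \text{ violations}
$$

$$
3{,}600{,}000 \times \$2{,}500 = \$9{,}000{,}000{,}000
$$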

Harming open-source

In an October blog post, Butterick discussed the harm that Copilot could do to open-source communities.

He argued that by serving users code snippets without ever revealing who wrote them, Copilot all but destroys the incentive for open-source contribution and collaboration.

Butterick said, “Microsoft is establishing a new walled garden that will prevent programmers from learning about established open-source groups.”

“This strategy will eventually suffocate these communities. The open-source projects themselves—their source repositories, issue trackers, mailing lists, and discussion boards—will lose user interest and involvement.”

Butterick worries that, over time, Copilot could cause open-source communities to deteriorate and, with them, the quality of the code in its training data.